The Data

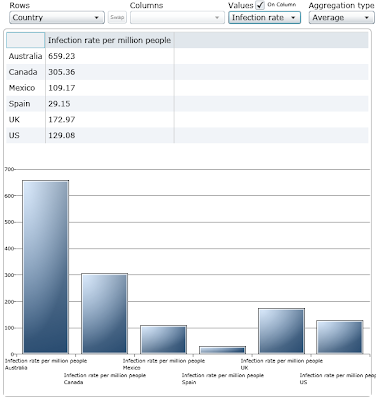

Let's have a look at the data for 6 countries:- Australia, Canada, Mexico, Spain, UK and US. The data shows the situation as of the 17th of July, 2009.

We can clearly see that the US has a very large number of confirmed cases. This is perhaps not the best metric, since the US has a large population that is well connected by road, rail and air. More rural countries with less inter-city connectivity (e.g. Spain) would probably see fewer cases.

An infection rate normalised by population (confirmed cases per million people) will give us a better handle on the real extent of the spread of the virus.
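A minimal sketch of that normalisation, assuming we have a confirmed-case count and a population for each country. The population figures are the ones quoted in this post for Australia and the UK; the case counts are made-up placeholders, not the July 2009 data.

```python
def per_million(count: int, population: int) -> float:
    """Scale a raw count (cases or deaths) to a rate per million people."""
    if population <= 0:
        raise ValueError("population must be positive")
    return count * 1_000_000 / population


# Populations as quoted in this post; the case counts are hypothetical placeholders.
populations = {"Australia": 21_855_000, "UK": 61_612_300}
hypothetical_cases = {"Australia": 10_000, "UK": 10_000}

for country, pop in populations.items():
    rate = per_million(hypothetical_cases[country], pop)
    print(f"{country}: {rate:.1f} confirmed cases per million")
```

With these placeholder counts, the same 10,000 cases correspond to a rate almost three times higher in Australia than in the UK, simply because Australia's population is smaller. The same function works for deaths per million.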

Of course, the infection rate will change over time. Smaller populations are likely to reach their maximum infection rate sooner than larger ones. Perhaps this is a factor in explaining the differences between, say, Australia (population 21,855,000) and the UK (population 61,612,300).

As unpleasant as it is to suffer from flu symptoms, what we're really afraid of is dying. So let's have a look at the deaths per million population in these countries.

Unfortunately, what we see above still has a time-dependent component to it. As the number of cases increases, so too will the number of deaths. Since each country is at a different stage of its outbreak, this measure doesn't let us compare the situation across countries fairly.

What we should be measuring is the proportion of infections that lead to death. Although this will still be somewhat dependent on the sample size, it will at least give us a first-order summary of the infection-to-death ratio, as well as some comparison between the countries. We are assuming that each country has the same strain of the virus, so that an equivalent person in any of the countries will have the same mortality rate from it. Extrinsic factors such as access to health care and the general health of the population will also affect the results, but this is as good a high-level overview as any.
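To see why the ratio helps, here is a sketch with invented cumulative totals for a single hypothetical country of 10 million people in which, by construction, 0.5% of confirmed cases are fatal. Deaths per million keep climbing as the outbreak grows, while deaths divided by confirmed cases stays put. Dividing by *confirmed* cases is itself an assumption: mild infections that never get tested are missing from the denominator, so this figure will sit above the true infection-to-death ratio.

```python
def summarise(cases_series, deaths_series, population):
    """For each snapshot, return (deaths per million, deaths per confirmed case)."""
    rows = []
    for cases, deaths in zip(cases_series, deaths_series):
        if cases == 0:
            raise ValueError("need at least one confirmed case per snapshot")
        deaths_per_million = deaths * 1_000_000 / population
        deaths_per_case = deaths / cases
        rows.append((deaths_per_million, deaths_per_case))
    return rows


# Invented cumulative totals for a hypothetical country of 10 million people,
# built so that exactly 0.5% of confirmed cases are fatal at every snapshot.
population = 10_000_000
cumulative_cases = [1_000, 4_000, 12_000]
cumulative_deaths = [5, 20, 60]

rows = summarise(cumulative_cases, cumulative_deaths, population)
for cases, (dpm, dpc) in zip(cumulative_cases, rows):
    print(f"{cases:>6} cases: {dpm:4.1f} deaths per million, {dpc:.1%} of cases fatal")
```

The first column grows twelvefold across the three snapshots; the second does not move, which is what makes it usable for comparing countries caught at different points in their outbreaks.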

As ever, this isn't going to be much of a conclusion. Panic is a very personal reaction. In the US, seasonal flu accounts for over 100 deaths per million population. As you can see above (3rd diagram down), swine flu is currently at 0.84 deaths per million population. I couldn't find any data on the infection-to-death rate for seasonal flu because every web search serves up swine flu data. I'll just add it to the other causes of death that I'm not worried about.
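A rough side-by-side of the two figures, assuming the 0.84 swine-flu figure refers to the US and treating 100 as a lower bound for seasonal flu. The seasonal figure is presumably an annual total while the swine-flu figure is a snapshot at 17 July 2009; cumulative swine-flu deaths can only go up, so this ratio can only shrink from here:

$$
\frac{\text{US seasonal flu deaths per million}}{\text{US swine flu deaths per million}} > \frac{100}{0.84} \approx 119
$$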

Some more perspective:- Here in Spain, suicide accounts for 160 deaths per million population... and I'm not planning on catching that either!

Shameless plug:- All diagrams were created using the DatalightProject's online statistics tool.